Nhan (Nathan) Tran

Hi there! I am Nhan Tran (sounds like “Nyun”). I also go by Nathan.

I'm currently a Ph.D. student in Computer Science at Cornell University, advised by Professor Abe Davis.

I'm also pursuing a minor in Film and Video Production at Cornell's Performing and Media Arts program.

My research sits at the intersection of human-computer interaction, computer graphics, and computer vision. My work blends computational methods with my creative interests in computational photography and filmmaking.

In summer 2026, I'm a research intern at Google Research in NYC. In summer and fall 2025, I interned at Adobe Research.

Email: nhan at cs dot cornell dot edu

Publications

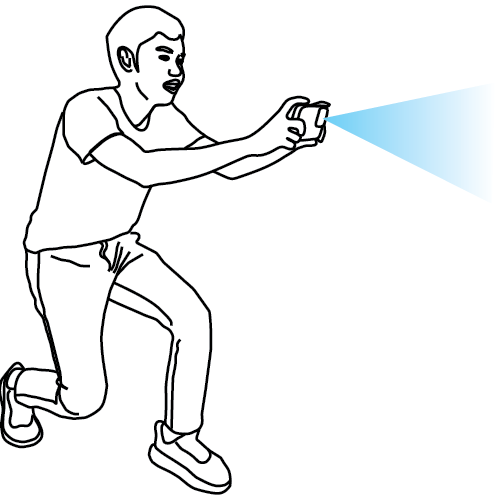

CineCraft: Unified Shot Planning, Capture, and Post-Processing for Mobile Cinematography

Nhan Tran, Sam Belliveau, Zixin Xu, Abe Davis

CHI 2026: ACM Conference on Human Factors in Computing Systems

Project

PDF

We present an interactive mobile application that supports the design, AR-guided capture, and post-processing (stabilization, take management, and rough-cut assembly) of cinematic shots on mobile devices, unifying filmmaking stages that traditionally require separate tools and personnel.

ARticulate: Interactive Visual Guidance for Demonstrated Rotational Degrees of Freedom in Mobile AR

Nhan Tran, Ethan Yang, Abe Davis

CHI 2025: ACM Conference on Human Factors in Computing Systems

Project

Video

PDF

We show that effective visual guidance for spatial configuration tasks depends on choosing intuitive frames of reference for different rotational degrees of freedom. We propose ARticulate, an interactive method for inferring appropriate reference frames from brief video demonstrations by users. Presented at CHI 2025 in Yokohama, Japan, in May 2025.

Personal Time-Lapse

Nhan Tran, Ethan Yang, Angelique Taylor, Abe Davis

UIST 2024: ACM Conference on User Interface Software and Technology

Project (MeCapture.com)

PDF

Patent-pending, free iOS app with potential applications in telehealth for documenting long-term bodily changes. Users in 14 countries.

We present a mobile augmented reality tool that uses custom 3D tracking, interactive visual feedback, and computational imaging to capture personal time-lapses. These time-lapses approximate long-term videos of a subject (typically part of the user's body) under consistent viewpoint, pose, and lighting, providing a convenient way to document and visualize long-term changes in the body, with many potential applications in remote healthcare and telemedicine.

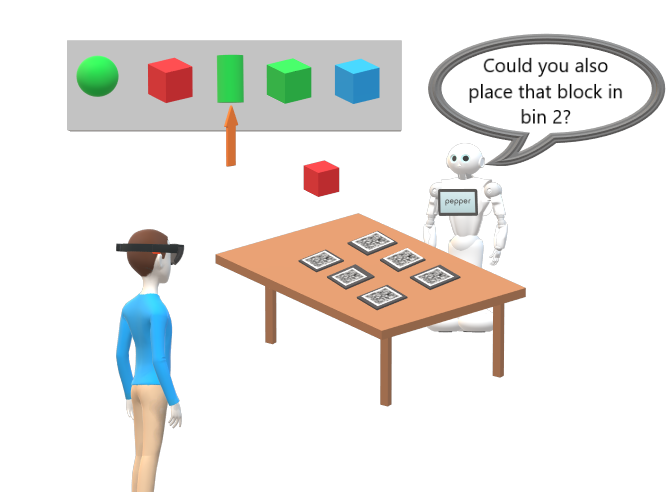

Now Look Here! ⇓ Mixed Reality Improves Robot Communication Without Cognitive Overload

Nhan Tran, Trevor Grant, Thao Phung, Leanne Hirshfield, Christopher Wickens, Tom Williams

HCI International Conference on Virtual, Augmented, and Mixed Reality (HCII 2023)

PDF

We explored whether the success of Mixed Reality Deictic Gestures for human-robot communication depends on a user's cognitive load, through an experiment grounded in theories of cognitive resources. We found these gestures provide benefits regardless of cognitive load, but only when paired with complex language. Our results suggest designers can use rich referring expressions with these gestures without overloading users.

What's The Point? Tradeoffs Between Effectiveness and Social Perception When Using Mixed Reality to Enhance Gesturally Limited Robots

Jared Hamilton, Thao Phung, Nhan Tran, Tom Williams

ACM/IEEE International Conference on Human-Robot Interaction (HRI 2021)

PDF

We present the first experiment analyzing the effectiveness of robot-generated mixed reality gestures using real robotic and mixed reality hardware. Our findings demonstrate how these gestures increase user effectiveness by decreasing user response time during visual search tasks, and show that robots can safely pair longer, more natural referring expressions with mixed reality gestures without worrying about cognitively overloading their interlocutors.

Adapting Mixed Reality Robot Communication to Mental Workload

Nhan Tran

HRI Pioneers Workshop at the International Conference on Human-Robot Interaction (HRI 2020)

★ HRI Pioneers ★

PDF

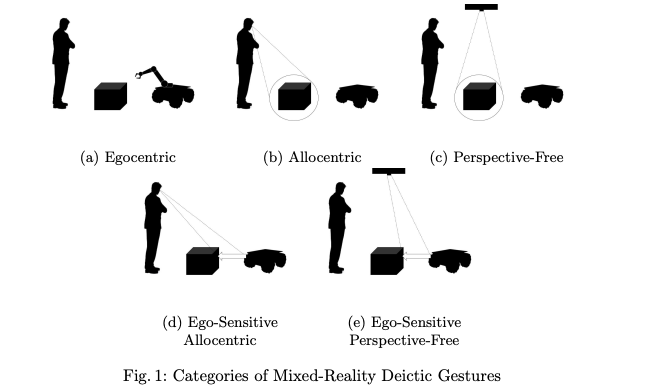

Mixed Reality Deictic Gesture for Multi-Modal Robot Communication

Tom Williams, Matthew Bussing, Sebastian Cabrol, Elizabeth Boyle, Nhan Tran

ACM/IEEE International Conference on Human-Robot Interaction (HRI 2019)

PDF

We investigate human perception of videos simulating the display of allocentric gestures, in which robots circle their targets in users' fields of view. Our results suggest that this is an effective communication strategy, both in terms of objective accuracy and subjective perception, especially when paired with complex natural language references.

Augmented, Mixed, and Virtual Reality Enabling of Robot Deixis

Tom Williams, Nhan Tran, Josh Rands, Neil T Dantam

HCI International Conference on Virtual, Augmented, and Mixed Reality (HCII 2018)

PDF

Humans use deictic gestures like pointing to help identify targets of interest when interacting with one another. Research shows that similar robot gestures enable effective human-robot interaction. We present a conceptual framework for mixed-reality deictic gestures and summarize our work using these techniques to advance the state of the art in robot-generated deixis.

Talks

April 2026: Oral presentation of CineCraft at CHI 2026 in Barcelona, Spain.

Nov 2025: Invited speaker at Columbia University's Fall 2025 HCI Seminar.

Aug 2025: Live demo of MeCapture at the SIGGRAPH 2025 Appy Hour. A fun interview was featured in the SIGGRAPH blog.

May 2025: Oral presentation of ARticulate at CHI 2025 in Yokohama, Japan.

April 2025: Finalist at Cornell's annual Eastman-Rice Persuasive Debate Contest; won 2nd place.

Feb 2025: Invited lightning talk speaker at New York City Computer Vision Day.

Jan 2025: Invited speaker at the Cornell Tech XR Monthly Seminar (Faculty Host: Harald Haraldsson).

Nov 2024: Invited speaker at Princeton University's Vision & Graphics Seminar, PIXL Lunch (Faculty Host: Adam Finkelstein).

Oct 2024: Finalist in the Three Minute Thesis (3MT) Competition at Harvard Engineering's Ivy Symposium.

Oct 2024: Oral presentation of Personal Time-Lapse at UIST 2024.

Films & Videos

Outside of my research, creating videos has been a long-time creative outlet. Through Cornell's Cinematography program, I've had the chance to wear many hats: writer/director, director of photography, assistant camera (AC), gaffer, and editor, along with roles in the lighting and art departments. These experiences have given me a deep appreciation for the filmmaking process, from scripting in pre-production and working with actors on set to fine-tuning the edit in post-production. In many ways, these roles inform my research, motivating me to improve creative workflows and address the pain points that content creators face throughout the process.

Some of the films I worked on can be found below or on my YouTube channel. Please note that these are student productions with zero budget, but plenty of passion!

Misc Projects

Vision Slice Bot: Generalized Food Cutting with User Inputs

Full Demo Video

My four classmates and I developed a vision-based, one-armed robot that tracks and manipulates user-specified food items for precise cutting tasks. Built on top of CLIPSeg, an open-vocabulary semantic segmentation model, it can track and cut a variety of fruits and vegetables. Our demo video shows it in action, detecting, grasping, moving, and cutting foods according to user prompts. This was a project for the graduate Robot Manipulation class taught by Prof. Tapo Bhattacharjee.

World GPT

Westworld-inspired teaser video

Built in 6 hours at Cornell Tech's first AI Hackathon (April 2023) with 5 team members. We created a Unity virtual world where agents simulate memories, hold unscripted conversations, and demonstrate emergent interactions using GPT-3. Before the real-time live demo, we had 15 minutes to put together this teaser video, inspired by HBO's Westworld.

Robotic Medical Crash Cart

Video 1 (Hardware)

Video 2 (Pilot Study)

I led this project with a team of undergraduates to transform a medical crash cart used in hospitals into a smart robotic system as part of the Mobile Human-Robot Interaction class taught by Prof. Wendy Ju at Cornell Tech. The base is built on a modified hoverboard. For perception, we used a RealSense depth sensor to prototype a "follow me" interaction, in which the robot carries medical supplies and follows a designated user.

Wall-Z 1.0

My friend Ryan and I built Wall-Z, a robot inspired by Disney's Wall-E. It uses on-edge processing with an NVIDIA Jetson for American Sign Language (ASL) recognition, uses VR for remote visualization of its environment, and synchronizes its head movement with a VR headset.

Mixed-Reality Assistant for Medication Navigation and Tracking

Code

I built an embodied mixed reality assistant on the Microsoft HoloLens 1. Through virtual interfaces, users anchor where they placed their pill bottles; the assistant saves those locations in a map and, when requested, projects an overlay of the shortest path from the user's current position to the saved anchor points.

3D-printed Mars Rover

Video

Team project with the Mines Robotics Club. We built a tiny Mars rover to compete in the Colorado Space Grant Robotics Challenge. The robot used several proximity sensors to avoid obstacles and drive toward a beacon, and it was designed to withstand the Mars-like environment of Great Sand Dunes National Park.

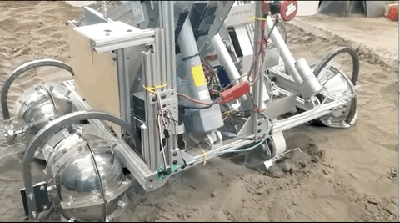

Blasterbotica: The Mining Bot at the NASA Robotic Mining Competition

Video

Built with the Colorado School of Mines’ Blasterbotica senior design team to compete in the NASA Robotic Mining Competition. This robot could traverse the arena, avoid obstacles, excavate regolith, and dump the collected regolith into the final collection bin. As the youngest member of the team, I worked closely with another senior design team member to implement a ROS + OpenCV pipeline that detects obstacles and the collection bin.

Biped Robot v1.5 - A DIY Humanoid Walking Robot

Video

My friend Arthur and I built this biped robot over a weekend, after watching the debut of Boston Dynamics' Atlas robot. It was designed to imitate human walking, detect obstacles, and be operated using hand gestures. Building it ourselves taught us that bipedal locomotion is hard!

Hailfire, a hand gesture-controlled robot

I built this while learning how to control an Arduino from the web using Cylon.js. This prototype shows how a robot can be operated using JavaScript and an accelerometer. I gave a lightning talk about this project at the 2016 O'Reilly Fluent Conference.

Sir Mixer: An emotionally aware bartender robot

Video

My roommate Patrick and I built an IoT drink mixer that interprets the facial expressions of human users, infers their emotions, and then mixes drinks accordingly.